We need to change rules and institutions, while still promoting innovation, to protect people from faulty AI

A California jury may soon have to decide who is responsible when artificial intelligence harms someone. In December 2019, a person driving a Tesla equipped with an artificial intelligence driving system killed two people in an accident in Gardena. The Tesla driver faces several years in prison. In light of this and other incidents, both the National Highway Traffic Safety Administration (NHTSA) and the National Transportation Safety Board are investigating Tesla crashes, and NHTSA has recently broadened its probe to explore how drivers interact with Tesla systems.

Getting the liability landscape right is essential to unlocking AI’s potential. Uncertain rules and potentially costly litigation will discourage investment in, and development and adoption of, AI systems. The wider adoption of AI in health care, autonomous vehicles and other industries depends on the framework that determines who, if anyone, ends up liable for an injury caused by an artificial intelligence system.

Yet liability too often focuses on the easiest target: the end user of the algorithm. Liability inquiries often start (and end) with the driver of the car that crashed or the physician who made a faulty treatment decision. The key is to ensure that all stakeholders, including users, developers and everyone else along the chain from product development to use, bear enough liability to ensure AI safety and effectiveness, but not so much that they give up on AI.

Second, some AI errors should be litigated in special courts with expertise in adjudicating AI cases. These specialized tribunals could develop expertise in particular technologies or issues, such as the interaction of two AI systems. Such specialized courts are not new: in the U.S., for example, specialist courts have protected childhood vaccine manufacturers for decades by adjudicating vaccine injuries and developing deep knowledge of the field.

South Africa Latest News, South Africa Headlines

Similar News:You can also read news stories similar to this one that we have collected from other news sources.

Sort your entire photo library with this AIWith Excire Foto 2022, you can tidy up your image database with just a few clicks of the mouse.

Sort your entire photo library with this AIWith Excire Foto 2022, you can tidy up your image database with just a few clicks of the mouse.

Read more »

Tesla lays off nearly 200 Autopilot employees who help train the company’s AILabelling data is essential for developing many AI systems

Tesla lays off nearly 200 Autopilot employees who help train the company’s AILabelling data is essential for developing many AI systems

Read more »

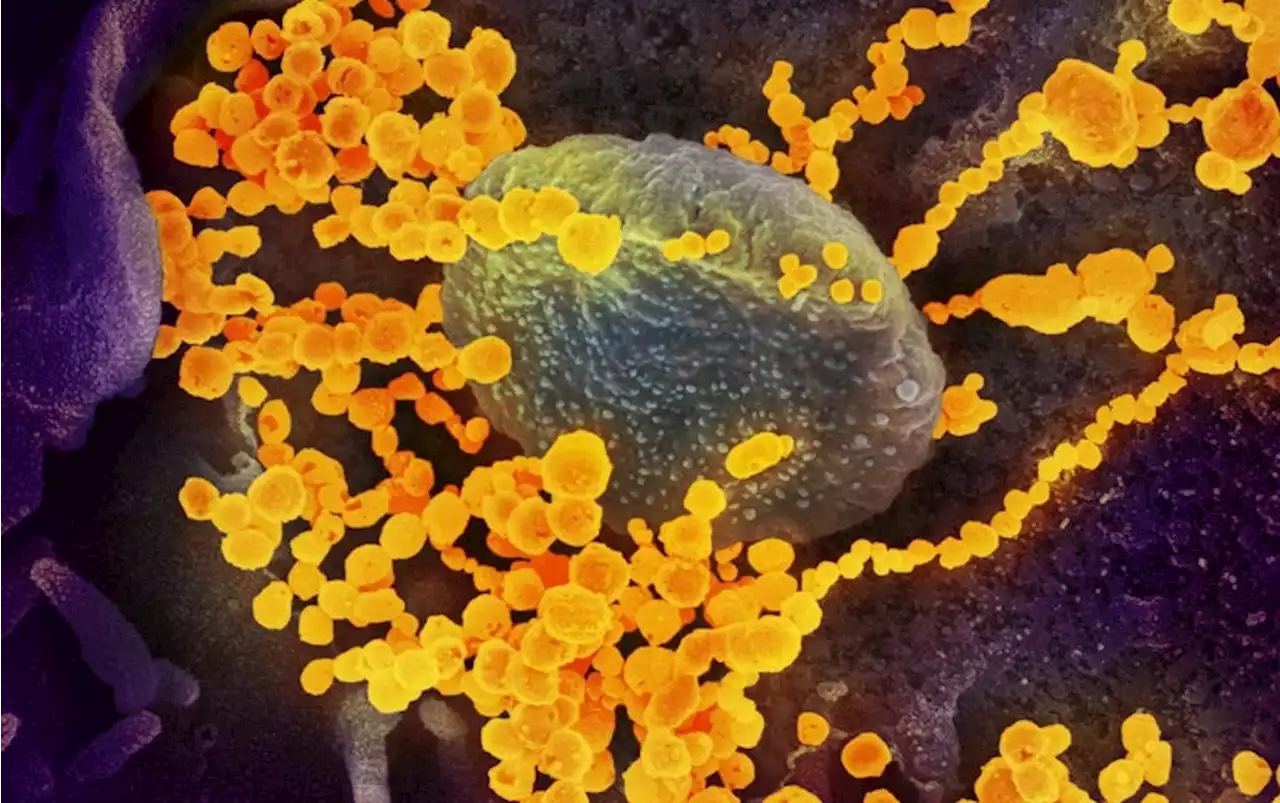

This AI Tool Could Predict the Next Coronavirus VariantThe model, which uses machine learning to track the fitness of different viral strains, accurately predicted the rise of Omicron’s BA.2 subvariant and the Alpha variant

This AI Tool Could Predict the Next Coronavirus VariantThe model, which uses machine learning to track the fitness of different viral strains, accurately predicted the rise of Omicron’s BA.2 subvariant and the Alpha variant

Read more »

Using Simulations Of Alleged Ethics Violations To Ardently And Legally Nail Those Biased AI Ethics Transgressors Amid Fully Autonomous SystemsA new means to detect AI Ethics violations consists of simulating an AI system to try and detect or predict that AI Ethics violations could arise. This could be used for example in the case of AI-based self-driving cars.

Using Simulations Of Alleged Ethics Violations To Ardently And Legally Nail Those Biased AI Ethics Transgressors Amid Fully Autonomous SystemsA new means to detect AI Ethics violations consists of simulating an AI system to try and detect or predict that AI Ethics violations could arise. This could be used for example in the case of AI-based self-driving cars.

Read more »

Tesla lays off nearly 200 Autopilot employees who help train the company’s AILabelling data is essential for developing many AI systems

Tesla lays off nearly 200 Autopilot employees who help train the company’s AILabelling data is essential for developing many AI systems

Read more »

Todd: Canadian cops far less likely to kill, or be killed, than U.S. counterpartsAnalysis: Canadian police are often accused of using excessive force, but a U.S. officer is five times more likely than a Canadian cop to shoot and kill.

Todd: Canadian cops far less likely to kill, or be killed, than U.S. counterpartsAnalysis: Canadian police are often accused of using excessive force, but a U.S. officer is five times more likely than a Canadian cop to shoot and kill.

Read more »